READING SUBTLY

This was the domain of my Blogger site from 2009 to 2018, when I moved to this domain and started The Storytelling Ape. The search option should help you find any of the old posts you're looking for.

Why Tamsin Shaw Imagines the Psychologists Are Taking Power


Tamsin Shaw’s essay in the February 25th issue of The New York Review of Books, provocatively titled “The Psychologists Take Power,” is no more scholarly than your average political attack ad, nor is it any more credible. (The article is available online, but I won’t lend it further visibility to search engines by linking to it here.) Two of the psychologists maligned in the essay, Jonathan Haidt and Steven Pinker, recently contributed a letter to the editors which effectively highlights Shaw’s faulty reasoning and myriad distortions, describing how she “prosecutes her case by citation-free attribution, spurious dichotomies, and standards of guilt by association that make Joseph McCarthy look like Sherlock Holmes” (82).

Upon first reading Shaw’s piece, I dismissed it as a particularly unscrupulous bit of interdepartmental tribalism—a philosopher bemoaning the encroachment by pesky upstart scientists into what was formerly the bailiwick of philosophers. But then a line in Shaw’s attempted rebuttal of Haidt and Pinker’s letter sent me back to the original essay, and this time around I recognized it as a manifestation of a more widespread trend among scholars, and a rather unscholarly one at that.

Shaw begins her article by accusing a handful of psychologists of exceeding the bounds of their official remit. These researchers have risen to prominence in recent years through their studies into human morality. But now, instead of restricting themselves, as responsible scientists would, to describing how we make moral judgements and attempting to explain why we respond to moral dilemmas the way we do, these psychologists have begun arrogating moral authority to themselves. They’ve begun, in other words, trying to tell us how we should reason morally—according to Shaw anyway. Her article then progresses through shady innuendo and arguments based on what Haidt and Pinker call “guilt through imaginability” to connect this group of authors to the CIA’s program of “enhanced interrogation,” i.e. torture, which culminated in such atrocities as those committed in the prisons at Abu Ghraib and Guantanamo Bay.

Shaw’s sole piece of evidence comes from a report that was commissioned by the American Psychological Association. David Hoffman and his fellow investigators did indeed find that two members of the APA played a critical role in developing the interrogation methods used by the CIA, and they had the sanction of top officials. Neither of the two, however, and none of those officials authored any of the books on moral psychology that Shaw is supposedly reviewing. In the report’s conclusion, the investigators describe the responses of clinical psychologists who “feel physically sick when they think about the involvement of psychologists intentionally using harsh interrogation techniques.” Shaw writes,

It is easy to imagine the psychologists who claim to be moral experts dismissing such a reaction as an unreliable “gut response” that must be overridden by more sophisticated reasoning. But a thorough distrust of rapid, emotional responses might well leave human beings without a moral compass sufficiently strong to guide them through times of crisis, when our judgement is most severely challenged, or to compete with powerful nonmoral motivations. (39)

What she’s referring to here is the two-system model of moral reasoning which posits a rapid, intuitive system, programmed in large part by our genetic inheritance but with some cultural variation in its expression, matched against a more effort-based, cerebral system that requires the application of complex reasoning.

But it must be noted that nowhere does any of the authors she’s reviewing make a case for a “thorough distrust of rapid, emotional responses.” Their positions are far more nuanced, and Haidt in fact argues in his book The Righteous Mind that liberals could benefit from paying more heed to some of their moral instincts—a case that Shaw herself summarizes in her essay when she’s trying to paint him as an overly “didactic” conservative.

Haidt and Pinker’s response to Shaw’s argument by imaginability was to simply ask the other five authors she insinuates support torture whether they indeed reacted the way she describes. They write, “The results: seven out of seven said ‘no’” (82). These authors’ further responses to the question offer a good opportunity to expose just how off-base Shaw’s simplistic characterizations are.

None of these psychologists believes that a reaction of physical revulsion must be overridden or should be thoroughly distrusted. But several pointed out that in the past, people have felt physically sick upon contemplating homosexuality, interracial marriage, vaccination, and other morally unexceptionable acts, so gut feelings alone cannot constitute a “moral compass.” Nor is the case against “enhanced interrogation” so fragile, as Shaw implies, that it has to rest on gut feelings: the moral arguments against torture are overwhelming. So while primitive physical revulsion may serve as an early warning signal indicating that some practice calls for moral scrutiny, it is “the more sophisticated reasoning” that should guide us through times of crisis. (82-emphasis in original)

One phrase that should stand out here is “the moral arguments against torture are overwhelming.” Shaw is supposedly writing about a takeover by psychologists who advocate torture—but none of them actually advocates torture. And, having read four of the six books she covers, I can aver that this response was entirely predictable based on what the authors had written. So why does Shaw attempt to mislead her readers?

The false implication that the authors she’s reviewing support torture isn’t the only central premise of Shaw’s essay that’s simply wrong; if these psychologists really are trying to take power, as she claims, that’s news to them. Haidt and Pinker begin their rebuttal by pointing out that “Shaw can cite no psychologist who claims special authority or ‘superior wisdom’ on moral matters” (82). Every one of them, with a single exception, in fact includes an explanation of what separates the two endeavors—describing human morality on the one hand, and prescribing values or behaviors on the other—in the very books Shaw professes to find so alarming. The lone exception, Yale psychologist Paul Bloom, author of Just Babies: The Origins of Good and Evil, wrote to Haidt and Pinker, “The fact that one cannot derive morality from psychological research is so screamingly obvious that I never thought to explicitly write it down” (82).

Yet Shaw insists all of these authors commit the fallacy of moving from is to ought; you have to wonder if she even read the books she’s supposed to be reviewing—beyond mining them for damning quotes anyway. And didn’t any of the editors at The New York Review think to check some of her basic claims? Or were they simply hoping to bank on the publication of what amounts to controversy porn? (Think of the dilemma faced by the authors: do you respond and draw more attention to the piece, or do you ignore it and let some portion of the readership come away with a wildly mistaken impression?)

Haidt and Pinker do a fine job of calling out most of Shaw’s biggest mistakes and mischaracterizations. But I want to draw attention to two more instances of her falling short of any reasonable standard of scholarship, because each one reveals something important about the beliefs Shaw uses as her own moral compass. The authors under review situate their findings on human morality in a larger framework of theories about human evolution. Shaw characterizes this framework as “an unverifiable and unfalsifiable story about evolutionary psychology” (38). Shaw has evidently attended the Ken Ham school of evolutionary biology, which preaches that science can only concern itself with phenomena occurring right before our eyes in a lab. The reality is that, while testing adaptationist theories is a complicated endeavor, there are usually at least two ways to falsify them. You can show that the trait or behavior in question is absent in many cultures, or you can show that it emerges late in life after some sort of deliberate training. One of the books Shaw is supposedly reviewing, Bloom’s Just Babies, focuses specifically on research demonstrating that many of our common moral intuitions emerge when we’re babies, in our first year of life, with no deliberate training whatsoever.

Bloom comes in for some more targeted, if off-hand, criticism near the conclusion of Shaw’s essay for an article he wrote to challenge the increasingly popular sentiment that we can solve our problems as a society by encouraging everyone to be more empathetic. Empathy, Bloom points out, is a finite resource; we’re simply not capable of feeling for every single one of the millions of individuals in need of care throughout the world. So we need to offer that care based on principle, not feeling. Shaw avoids any discussion of her own beliefs about morality in her essay, but from the nature of her mischaracterization of Bloom’s argument we can start to get a sense of the ideology informing her prejudices. She insists that when Paul Bloom, in his own Atlantic article, “The Dark Side of Empathy,” warns us that empathy for people who are seen as victims may be associated with violent, punitive tendencies toward those in authority, we should be wary of extrapolating from his psychological claims a prescription for what should and should not be valued, or inferring that we need a moral corrective to a culture suffering from a supposed excess of empathic feelings. (40-1)

The “supposed excess of empathic feelings” isn’t the only laughable distortion people who actually read Bloom’s essay will catch out; the actual examples he cites of when empathy for victims leads to “violent, punitive tendencies” include Donald Trump and Ann Coulter stoking outrage against undocumented immigrants by telling stories of the crimes a few of them commit. This misrepresentation raises an important question: why would Shaw want to mislead her readers into believing Bloom’s intention is to protect those in authority? This brings us to the McCarthyesque part of Shaw’s attack ad.

The sections of the essay drawing a web of guilt connecting the two psychologists who helped develop torture methods for the CIA to all the authors she’d have us believe are complicit focus mainly on Martin Seligman, whose theory of learned helplessness formed the basis of the CIA’s approach to harsh interrogation. Seligman is the founder of a subfield called Positive Psychology, which he developed as a counterbalance to what he perceived as an almost exclusive focus on all that can go wrong with human thinking, feeling, and behaving. His Positive Psychology Center at the University of Pennsylvania has received $31 million in recent years from the Department of Defense—a smoking gun by Shaw’s lights. And Seligman even admits that on several occasions he met with those two psychologists who participated in the torture program. The other authors Shaw writes about have in turn worked with Seligman on a variety of projects. Haidt even wrote a book on Positive Psychology called The Happiness Hypothesis.

In Shaw’s view, learned helplessness theory is a potentially dangerous tool being wielded by a bunch of mad scientists and government officials corrupted by financial incentives and a lust for military dominance. To her mind, the notion that Seligman could simply want to help soldiers cope with the stresses of combat is all but impossible to even entertain. In this and every other instance when Shaw attempts to mislead her readers, it’s to put the same sort of negative spin on the psychologists’ explicitly stated positions. If Bloom says empathy has a dark side, then all the authors in question are against empathy. If Haidt argues that resilience—the flipside of learned helplessness—is needed to counteract a culture of victimhood, then all of these authors are against efforts to combat sexism and racism on college campuses. And, as we’ve seen, if these authors say we should question our moral intuitions, it’s because they want to be able to get away with crimes like torture. “Expertise in teaching people to override their moral intuitions is only a moral good if it serves good ends,” Shaw herself writes. “Those ends,” she goes on, “should be determined by rigorous moral deliberation” (40). Since this is precisely what the authors she’s criticizing say in their books, we’re left wondering what her real problem with them might be.

In her reply to Haidt and Pinker’s letter, Shaw suggests her aim for the essay was to encourage people to more closely scrutinize the “doctrines of Positive Psychology” and the central principles underlying psychological theories about human morality. I was curious to see how she’d respond to being called out for mistakenly stating that the psychologists were claiming moral authority and that they were given to using their research to defend the use of torture. Her main response is to repeat the central aspects of her rather flimsy case against Seligman. But then she does something truly remarkable; she doesn’t deny using guilt by imaginability—she defends it.

Pinker and Haidt say they prefer reality to imagination, but imagination is the capacity that allows us to take responsibility, insofar as it is ever possible, for the ends for which our work will be used and the consequences that it will have in the world. Such imagination is a moral and intellectual virtue that clearly needs to be cultivated. (85)

So, regardless of what the individual psychologists themselves explicitly say about torture, for instance, as long as they’re equipping other people with the conceptual tools to justify torture, they’re still at least somewhat complicit. This was the line that first made me realize Shaw’s essay was something other than a philosopher munching on sour grapes.

Shaw’s approach to connecting each of the individual authors to Seligman and then through him to the torture program is about as sophisticated, and about as credible, as any narrative concocted by your average online conspiracy theorist. But she believes that these connections are important and meaningful, a belief, I suspect, that derives from her own philosophy. Advocates of this philosophy, commonly referred to as postmodernism or poststructuralism, posit that our culture is governed by a dominant ideology that serves to protect and perpetuate the societal status quo, especially with regard to what are referred to as hegemonic relationships—men over women, whites over other ethnicities, heterosexuals over homosexuals. This dominant ideology finds expression in, while at the same time propagating itself through, cultural practices ranging from linguistic expressions to the creation of art to the conducting of scientific experiments.

Inspired by figures like Louis Althusser and Michel Foucault, postmodern scholars reject many of the central principles of humanism, including its emphasis on the role of rational discourse in driving societal progress. This is because the processes of reasoning and research that go into producing knowledge can never be fully disentangled from the exercise of power, or so it is argued. We experience the world through the medium of culture, and our culture distorts reality in a way that makes hierarchies seem both natural and inevitable. So, according to postmodernists, not only does science fail to create true knowledge of the natural world and its inhabitants, but the ideas it generates must also be scrutinized to identify their hidden political implications.

What such postmodern textual analyses look like in practice is described in sociologist Ullica Segerstrale’s book, Defenders of the Truth: The Sociobiology Debate. Segerstrale observed that postmodern critics of evolutionary psychology (which was more commonly called sociobiology in the late 90s) were outraged by what they presumed were the political implications of the theories, not by what evolutionary psychologists actually wrote. She explains,

In their analysis of their targets’ texts, the critics used a method I call moral reading. The basic idea behind moral reading was to imagine the worst possible political consequences of a scientific claim. In this way, maximum guilt might be attributed to the perpetrator of this claim. (206)

This is similar to the type of imagination Shaw faults psychologists today for insufficiently exercising. For the postmodernists, the sum total of our cultural knowledge is what sustains all the varieties of oppression and injustice that exist in our society, so unless an author explicitly decries oppression or injustice he’ll likely be held under suspicion. Five of the six books Shaw subjects to her moral reading were written by white males. The sixth was written by a male and a female, both white. The people the CIA tortured were not white. So you might imagine white psychologists telling everyone not to listen to their conscience to make it easier for them to reap the benefits of a history of colonization. Of course, I could be completely wrong here; maybe this scenario isn’t what was playing out in Shaw’s imagination at all. But that’s the problem—there are few limits to what any of us can imagine, especially when it comes to people we disagree with on hot-button issues.

Postmodernism began in English departments back in the ‘60s where it was originally developed as an approach to analyzing literature. From there, it spread to several other branches of the humanities and is now making inroads into the social sciences. Cultural anthropology was the first field to be mostly overtaken. You can see precursors to Shaw’s rhetorical approach in attacks leveled against sociobiologists like E.O. Wilson and Napoleon Chagnon by postmodern anthropologists like Marshall Sahlins. In a review published in 2001, also in The New York Review of Books, Sahlins writes,

The ‘60s were the longest decade of the 20th century, and Vietnam was the longest war. In the West, the war prolonged itself in arrogant perceptions of the weaker peoples as instrumental means of the global projects of the stronger. In the human sciences, the war persists in an obsessive search for power in every nook and cranny of our society and history, and an equally strong postmodern urge to “deconstruct” it. For his part, Chagnon writes popular textbooks that describe his ethnography among the Yanomami in the 1960s in terms of gaining control over people.

Demonstrating his own power has been not only a necessary condition of Chagnon’s fieldwork, but a main technique of investigation.

The first thing to note is that Sahlins’s characterization of Chagnon’s books as narratives of “gaining control over people” is just plain silly; Chagnon was more often than not at the mercy of the Yanomamö. The second is that, just as anyone who’s actually read the books by Haidt, Pinker, Greene, and Bloom will be shocked by Shaw’s claim that their writing somehow bolsters the case for torture, anyone familiar with Chagnon’s studies of the Yanomamö will likely wonder what the hell they have to do with Vietnam, a war that to my knowledge he never expressed an opinion of in writing.

However, according to postmodern logic—or we might say postmodern morality—Chagnon’s observation that the Yanomamö were often violent, along with his espousal of a theory that holds such violence to have been common among preindustrial societies, leads inexorably to the conclusion that he wants us all to believe violence is part of our fixed nature as humans. Through the lens of postmodernism, Chagnon’s work is complicit in making people believe working for peace is futile because violence is inevitable. Chagnon may counter that he believes violence is likely to occur only in certain circumstances, and that by learning more about what conditions lead to conflict we can better equip ourselves to prevent it. But that doesn’t change the fact that society needs high-profile figures to bring before our modern academic version of the inquisition, so that all the other white men lording it over the rest of the world will see what happens to anyone who deviates from right (actually far-left) thinking.

Ideas really do have consequences of course, some of which will be unforeseen. The place where an idea ends up may even be repugnant to its originator. But the notion that we can settle foreign policy disputes, eradicate racism, end gender inequality, and bring about world peace simply by demonizing artists and scholars whose work goes against our favored party line, scholars and artists who maybe can’t be shown to support these evils and injustices directly but can certainly be imagined to be doing so in some abstract and indirect way—well, that strikes me as far-fetched. It also strikes me as dangerously misguided, since it’s not like scholars, or anyone else, ever needed any extra encouragement to imagine people who disagree with them being guilty of some grave moral offense. We’re naturally tempted to do that as it is.

Part of becoming a good scholar—part of becoming a grownup—is learning to live with people whose beliefs are different from yours, and to treat them fairly. Unless a particular scholar is openly and explicitly advocating torture, ascribing such an agenda to her is either irresponsible, if we’re unwittingly misrepresenting her, or dishonest, if we’re doing so knowingly. Arguments from imagined adverse consequences can go both ways. We could, for instance, easily write articles suggesting that Shaw is a Stalinist, or that she advocates prosecuting perpetrators of what members of the far left deem to be thought crimes. What about the consequences of encouraging suspicion of science in an age of widespread denial of climate change? Postmodern identity politics is at this moment posing a threat to free speech on college campuses. And the tactics of postmodern activists begin and end with the stoking of moral outrage, so we could easily make a case that the activists are deliberately trying to instigate witch hunts. With each baseless accusation and counter-accusation, though, we’re getting farther and farther away from any meaningful inquiry, forestalling any substantive debate, and hamstringing any real moral or political progress.

Many people try to square the circle, arguing that postmodernism isn’t inherently antithetical to science, and that the supposed insights derived from postmodern scholarship ought to be assimilated somehow into science. When Thomas Huxley, the physician and biologist known as Darwin’s bulldog, said that science “commits suicide when it adopts a creed,” he was pointing out that by adhering to an ideology you’re taking its tenets for granted. Science, despite many critics’ desperate proclamations to the contrary, is not itself an ideology; science is an epistemology, a set of principles and methods for investigating nature and arriving at truths about the world. Even the most well-established of these truths, however, is considered provisional, open to potential revision or outright rejection as the methods, technologies, and theories that form the foundation of this collective endeavor advance over the generations.

In her essay, Shaw cites the results of a project attempting to replicate the findings of several seminal experiments in social psychology, counting the surprisingly low success rate as further cause for skepticism of the field. What she fails to appreciate here is that the replication project is being done by a group of scientists who are psychologists themselves, because they’re committed to honing their techniques for studying the human mind. I would imagine if Shaw’s postmodernist precursors had shared a similar commitment to assessing the reliability of their research methods, such as they are, and weighing the validity of their core tenets, then the ideology would have long since fallen out of fashion by the time she was taking up a pen to write about how scary psychologists are.

The point Shaw's missing here is that it’s precisely this constant quest to check and recheck the evidence, refine and further refine the methods, test and retest the theories, that makes science, if not a source of superior wisdom, then still the most reliable approach to answering questions about who we are, what our place is in the universe, and what habits and policies will give us, as individuals and as citizens, the best chance to thrive and flourish. As Saul Perlmutter, one of the discoverers of dark energy, has said, “Science is an ongoing race between our inventing ways to fool ourselves, and our inventing ways to avoid fooling ourselves.” Shaw may be right that no experimental result could ever fully settle a moral controversy, but experimental results are often not just relevant to our philosophical deliberations but critical to keeping those deliberations firmly grounded in reality.


Lab Flies: Joshua Greene’s Moral Tribes and the Contamination of Walter White

Joshua Greene’s book “Moral Tribes” posits a dual-system theory of morality, where a quick, intuitive system 1 makes judgments based on deontological considerations—“it’s just wrong”—whereas the slower, more deliberative system 2 takes time to calculate the consequences of any given choice. Audiences can see these two systems on display in the series “Breaking Bad,” as well as in critics’ and audiences’ responses.

Walter White’s Moral Math

In an episode near the end of Breaking Bad’s fourth season, the drug kingpin Gus Fring gives his meth cook Walter White an ultimatum. Walt’s brother-in-law Hank is a DEA agent who has been getting close to discovering the high-tech lab Gus has created for Walt and his partner Jesse, and Walt, despite his best efforts, hasn’t managed to put him off the trail. Gus decides that Walt himself has likewise become too big a liability, and he has found that Jesse can cook almost as well as his mentor. The only problem for Gus is that Jesse, even though he too is fed up with Walt, will refuse to cook if anything happens to his old partner. So Gus has Walt taken at gunpoint to the desert where he tells him to stay away from both the lab and Jesse. Walt, infuriated, goads Gus with the fact that he’s failed to turn Jesse against him completely, to which Gus responds, “For now,” before going on to say,

In the meantime, there’s the matter of your brother-in-law. He is a problem you promised to resolve. You have failed. Now it’s left to me to deal with him. If you try to interfere, this becomes a much simpler matter. I will kill your wife. I will kill your son. I will kill your infant daughter.

In other words, Gus tells Walt to stand by and let Hank be killed or else he will kill his wife and kids. Once he’s released, Walt immediately has his lawyer Saul Goodman place an anonymous call to the DEA to warn them that Hank is in danger. Afterward, Walt plans to pay a man to help his family escape to a new location with new, untraceable identities—but he soon discovers the money he was going to use to pay the man has already been spent (by his wife Skyler). Now it seems all five of them are doomed. This is when things get really interesting.

Walt devises an elaborate plan to convince Jesse to help him kill Gus. Jesse knows that Gus would prefer for Walt to be dead, and both Walt and Gus know that Jesse would go berserk if anyone ever tried to hurt his girlfriend’s nine-year-old son Brock. Walt’s plan is to make it look like Gus is trying to frame him for poisoning Brock with ricin. The idea is that Jesse would suspect Walt of trying to kill Brock as punishment for Jesse betraying him and going to work with Gus. But Walt will convince Jesse that this is really just Gus’s ploy to trick Jesse into doing what he has forbidden Gus to do up till now—and kill Walt himself. Once Jesse concludes that it was Gus who poisoned Brock, he will understand that his new boss has to go, and he will accept Walt’s offer to help him perform the deed. Walt will then be able to get Jesse to give him the crucial information he needs about Gus to figure out a way to kill him.

It’s a brilliant plan. The one problem is that it involves poisoning a nine-year-old child. Walt comes up with an ingenious trick which allows him to use a less deadly poison while still making it look like Brock has ingested the ricin, but for the plan to work the boy has to be made deathly ill. So Walt is faced with a dilemma: if he goes through with his plan, he can save Hank, his wife, and his two kids, but to do so he has to deceive his old partner Jesse in just about the most underhanded way imaginable—and he has to make a young boy very sick by poisoning him, with the attendant risk that something will go wrong and the boy, or someone else, or everyone else, will die anyway. The math seems easy: either four people die, or one person gets sick. The option recommended by the math is greatly complicated, however, by the fact that it involves an act of violence against an innocent child.

In the end, Walt chooses to go through with his plan, and it works perfectly. In another ingenious move, though, this time on the part of the show’s writers, Walt’s deception isn’t revealed until after his plan has been successfully implemented, which makes for an unforgettable shock at the end of the season. Unfortunately, this revelation after the fact, at a time when Walt and his family are finally safe, makes it all too easy to forget what made the dilemma so difficult in the first place—and thus makes it all too easy to condemn Walt for resorting to such monstrous means to see his way through.

Fans of Breaking Bad who read about the famous thought-experiment called the footbridge dilemma in Harvard psychologist Joshua Greene’s multidisciplinary and momentously important book Moral Tribes: Emotion, Reason, and the Gap between Us and Them will immediately recognize the conflicting feelings underlying our responses to questions about serving some greater good by committing an act of violence. Here is how Greene describes the dilemma:

A runaway trolley is headed for five railway workmen who will be killed if it proceeds on its present course. You are standing on a footbridge spanning the tracks, in between the oncoming trolley and the five people. Next to you is a railway workman wearing a large backpack. The only way to save the five people is to push this man off the footbridge and onto the tracks below. The man will die as a result, but his body and backpack will stop the trolley from reaching the others. (You can’t jump yourself because you, without a backpack, are not big enough to stop the trolley, and there’s no time to put one on.) Is it morally acceptable to save the five people by pushing this stranger to his death? (113-4)

As was the case for Walter White when he faced his child-poisoning dilemma, the math is easy: you can save five people—strangers in this case—through a single act of violence. One of the fascinating things about common responses to the footbridge dilemma, though, is that the math is all but irrelevant to most of us; no matter how many people we might save, it’s hard for us to see past the murderous deed of pushing the man off the bridge. The answer for a large majority of people faced with this dilemma, even in the case of variations which put the number of people who would be saved much higher than five, is no, pushing the stranger to his death is not morally acceptable.

Another fascinating aspect of our responses is that they change drastically with the modification of a single detail in the hypothetical scenario. In the switch dilemma, a trolley is heading for five people again, but this time you can hit a switch to shift it onto another track where there happens to be a single person who would be killed. Though the math and the underlying logic are the same—you save five people by killing one—something about pushing a person off a bridge strikes us as far worse than pulling a switch. A large majority of people say killing the one person in the switch dilemma is acceptable. To figure out which specific factors account for the different responses, Greene and his colleagues tweak various minor details of the trolley scenario before posing the dilemma to test participants. By now, so many experiments have relied on these scenarios that Greene calls trolley dilemmas the fruit flies of the emerging field known as moral psychology.

The Automatic and Manual Modes of Moral Thinking

One hypothesis for why the footbridge case strikes us as unacceptable is that it involves using a human being as an instrument, a means to an end. So Greene and his fellow trolleyologists devised a variation called the loop dilemma, which still has participants pulling a hypothetical switch, but this time the lone victim on the alternate track must stop the trolley from looping back around onto the original track. In other words, you’re still hitting the switch to save the five people, but you’re also using a human being as a trolley stop. People nonetheless tend to respond to the loop dilemma in much the same way they do the switch dilemma. So there must be some factor other than the prospect of using a person as an instrument that makes the footbridge version so objectionable to us.

Greene’s own theory for why our intuitive responses to these dilemmas are so different begins with what Daniel Kahneman, one of the founders of behavioral economics, labeled the two-system model of the mind. The first system, a sort of autopilot, is the one we operate in most of the time. We only use the second system when doing things that require conscious effort, like multiplying 26 by 47. While system one is quick and intuitive, system two is slow and demanding. Greene proposes as an analogy the automatic and manual settings on a camera. System one is point-and-click; system two, though more flexible, requires several calibrations and adjustments. We usually only engage our manual systems when faced with circumstances that are either completely new or particularly challenging.

According to Greene’s model, our automatic settings have functions that go beyond the rapid processing of information to encompass our basic set of moral emotions, from indignation to gratitude, from guilt to outrage, which motivates us to behave in ways that over evolutionary history have helped our ancestors transcend their selfish impulses to live in cooperative societies. Greene writes,

According to the dual-process theory, there is an automatic setting that sounds the emotional alarm in response to certain kinds of harmful actions, such as the action in the footbridge case. Then there’s manual mode, which by its nature tends to think in terms of costs and benefits. Manual mode looks at all of these cases—switch, footbridge, and loop—and says “Five for one? Sounds like a good deal.” Because manual mode always reaches the same conclusion in these five-for-one cases (“Good deal!”), the trend in judgment for each of these cases is ultimately determined by the automatic setting—that is, by whether the myopic module sounds the alarm. (233)

What makes the dilemmas difficult then is that we experience them in two conflicting ways. Most of us, most of the time, follow the dictates of the automatic setting, which Greene describes as myopic because its speed and efficiency come at the cost of inflexibility and limited scope for extenuating considerations.

The reason our intuitive settings sound an alarm at the thought of pushing a man off a bridge but remain silent about hitting a switch, Greene suggests, is that our ancestors evolved to live in cooperative groups where some means of preventing violence between members had to be in place to avoid dissolution—or outright implosion. One of the dangers of living with a bunch of upright-walking apes who possess the gift of foresight is that any one of them could at any time be plotting revenge for some seemingly minor slight, or conspiring to get you killed so he can move in on your spouse or inherit your belongings. For a group composed of individuals with the capacity to hold grudges and calculate future gains to function cohesively, the members must have in place some mechanism that affords a modicum of assurance that no one will murder them in their sleep. Greene writes,

To keep one’s violent behavior in check, it would help to have some kind of internal monitor, an alarm system that says “Don’t do that!” when one is contemplating an act of violence. Such an action-plan inspector would not necessarily object to all forms of violence. It might shut down, for example, when it’s time to defend oneself or attack one’s enemies. But it would, in general, make individuals very reluctant to physically harm one another, thus protecting individuals from retaliation and, perhaps, supporting cooperation at the group level. My hypothesis is that the myopic module is precisely this action-plan inspecting system, a device for keeping us from being casually violent. (226)

Hitting a switch to transfer a train from one track to another seems acceptable, even though a person ends up being killed, because nothing our ancestors would have recognized as violence is involved.

Many philosophers cite our different responses to the various trolley dilemmas as support for deontological systems of morality—those based on the inherent rightness or wrongness of certain actions—since we intuitively know the choices suggested by a consequentialist approach are immoral. But Greene points out that this argument begs the question of how reliable our intuitions really are. He writes,

I’ve called the footbridge dilemma a moral fruit fly, and that analogy is doubly appropriate because, if I’m right, this dilemma is also a moral pest. It’s a highly contrived situation in which a prototypically violent action is guaranteed (by stipulation) to promote the greater good. The lesson that philosophers have, for the most part, drawn from this dilemma is that it’s sometimes deeply wrong to promote the greater good. However, our understanding of the dual-process moral brain suggests a different lesson: Our moral intuitions are generally sensible, but not infallible. As a result, it’s all but guaranteed that we can dream up examples that exploit the cognitive inflexibility of our moral intuitions. It’s all but guaranteed that we can dream up a hypothetical action that is actually good but that seems terribly, horribly wrong because it pushes our moral buttons. I know of no better candidate for this honor than the footbridge dilemma. (251)

The obverse is that many of the things that seem morally acceptable to us actually do cause harm to people. Greene cites the example of a man who lets a child drown because he doesn’t want to ruin his expensive shoes, which most people agree is monstrous, even though we think nothing of spending money on things we don’t really need when we could be sending that money to save sick or starving children in some distant country. Then there are crimes against the environment, which always seem to rank low on our list of priorities even though their future impact on real human lives could be devastating. We have our justifications for such actions or omissions, to be sure, but how valid are they really? Is distance really a morally relevant factor when we let children die? Does the diffusion of responsibility among so many millions change the fact that we personally could have a measurable impact?

These black marks notwithstanding, cooperation, and even a certain degree of altruism, come naturally to us. To demonstrate this, Greene and his colleagues have devised some clever methods for separating test subjects’ intuitive responses from their more deliberate and effortful decisions. The experiments begin with a multi-participant exchange scenario developed by economic game theorists called the Public Goods Game, which has a number of anonymous players contribute to a common bank whose sum is then doubled and distributed evenly among them. Like the more famous game theory exchange known as the Prisoner’s Dilemma, the Public Goods Game rewards cooperation, but only when a threshold number of fellow cooperators is reached. The flip side, however, is that any individual who decides to be stingy can get a free ride from everyone else’s contributions and make an even greater profit. What tends to happen is, over multiple rounds, the number of players opting for stinginess increases until the game is ruined for everyone, a process analogous to a phenomenon in economics known as the Tragedy of the Commons. Everyone wants to graze a few more sheep on the commons than can be sustained fairly, so eventually the grounds are left barren.
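For readers who want to see the arithmetic behind the free-rider problem, here is a minimal sketch of a single round of the Public Goods Game. The group of four, the endowment of 10 units, and the function name are illustrative assumptions rather than details from Greene's experiments; only the doubling of the common bank and its even distribution come from the description above.

```python
def public_goods_payoffs(contributions, endowment=10.0, multiplier=2.0):
    """Return each player's payoff for one round of a Public Goods Game.

    Every player starts with `endowment` (an assumed value), contributes
    some portion of it to the common bank, and then receives an equal
    share of the bank after it has been multiplied (doubled by default).
    """
    n = len(contributions)
    share = multiplier * sum(contributions) / n
    return [endowment - c + share for c in contributions]


# Four players under three hypothetical patterns of contribution.
everyone_cooperates = public_goods_payoffs([10, 10, 10, 10])
one_free_rider = public_goods_payoffs([0, 10, 10, 10])
nobody_cooperates = public_goods_payoffs([0, 0, 0, 0])

print(everyone_cooperates)  # [20.0, 20.0, 20.0, 20.0]
print(one_free_rider)       # [25.0, 15.0, 15.0, 15.0]
print(nobody_cooperates)    # [10.0, 10.0, 10.0, 10.0]
```

Under these assumed numbers, the lone free rider comes out ahead of the cooperators, yet a group in which everyone reasons that way ends up with half of what full cooperation would have earned.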

The Biological and Cultural Evolution of Morality

Greene believes that humans evolved emotional mechanisms to prevent the various analogs of the Tragedy of the Commons from occurring so that we can live together harmoniously in tight-knit groups. The outcomes of multiple rounds of the Public Goods Game, for instance, tend to be far less dismal when players are given the opportunity to devote a portion of their own profits to punishing free riders. Most humans, it turns out, will be motivated by the emotion of anger to expend their own resources for the sake of enforcing fairness. Over several rounds, cooperation becomes the norm. Such an outcome has been replicated again and again, but researchers are always interested in factors that influence players’ strategies in the early rounds. Greene describes a series of experiments he conducted with David Rand and Martin Nowak, which were reported in an article in Nature in 2012. He writes,

…we conducted our own Public Goods Games, in which we forced some people to decide quickly (less than ten seconds) and forced others to decide slowly (more than ten seconds). As predicted, forcing people to decide faster made them more cooperative and forcing people to slow down made them less cooperative (more likely to free ride). In other experiments, we asked people, before playing the Public Goods Game, to write about a time in which their intuitions served them well, or about a time in which careful reasoning led them astray. Reflecting on the advantages of intuitive thinking (or the disadvantages of careful reflection) made people more cooperative. Likewise, reflecting on the advantages of careful reasoning (or the disadvantages of intuitive thinking) made people less cooperative. (62)

These results offer strong support for Greene’s dual-process theory of morality, and they even hint at the possibility that the manual mode is fundamentally selfish or amoral—in other words, that the philosophers have been right all along in deferring to human intuitions about right and wrong.

As good as our intuitive moral sense is for preventing the Tragedy of the Commons, however, when given free rein in a society composed of large groups of people who are strangers to one another, each with its own culture and priorities, our natural moral settings bring about an altogether different tragedy. Greene labels it the Tragedy of Commonsense Morality. He explains,

Morality evolved to enable cooperation, but this conclusion comes with an important caveat. Biologically speaking, humans were designed for cooperation, but only with some people. Our moral brains evolved for cooperation within groups, and perhaps only within the context of personal relationships. Our moral brains did not evolve for cooperation between groups (at least not all groups). (23)

Expanding on the story behind the Tragedy of the Commons, Greene describes what would happen if several groups, each having developed its own unique solution for making sure the commons were protected from overgrazing, were suddenly to come into contact with one another on a transformed landscape called the New Pastures. Each group would likely harbor suspicions against the others, and when it came time to negotiate a new set of rules to govern everybody the groups would all show a significant, though largely unconscious, bias in favor of their own members and their own ways.

The origins of moral psychology as a field can be traced to both developmental and evolutionary psychology. Seminal research conducted at Yale’s Infant Cognition Center, led by Karen Wynn, Kiley Hamlin, and Paul Bloom (and which Bloom describes in a charming and highly accessible book called Just Babies), has demonstrated that children as young as six months possess what we can easily recognize as a rudimentary moral sense. These findings suggest that much of the behavior we might have previously ascribed to lessons learned from adults is actually innate. Experiments based on game theory scenarios and thought-experiments like the trolley dilemmas are likewise thought to tap into evolved patterns of human behavior. Yet when University of British Columbia psychologist Joseph Henrich teamed up with several anthropologists to see how people living in various small-scale societies responded to game theory scenarios like the Prisoner’s Dilemma and the Public Goods Game, they discovered a great deal of variation. On the one hand, then, human moral intuitions seem to be rooted in emotional responses present at, or at least close to, birth, but on the other hand cultures vary widely in their conventional responses to classic dilemmas. These differences between cultural conceptions of right and wrong are in large part responsible for the conflict Greene envisions in his Parable of the New Pastures.

But how can a moral sense be both innate and culturally variable? “As you might expect,” Greene explains, “the way people play these games reflects the way they live.” People in some cultures rely much more heavily on cooperation to procure their sustenance, as is the case with the Lamalera of Indonesia, who live off the meat of whales they hunt in groups. Cultures also vary in how much they rely on market economies as opposed to less abstract and less formal modes of exchange. Just as people adjust the way they play economic games in response to other players’ moves, people acquire habits of cooperation based on the circumstances they face in their particular societies. Regarding the differences between small-scale societies in common game theory strategies, Greene writes,

Henrich and colleagues found that payoffs to cooperation and market integration explain more than two thirds of the variation across these cultures. A more recent study shows that, across societies, market integration is an excellent predictor of altruism in the Dictator Game. At the same time, many factors that you might expect to be important predictors of cooperative behavior—things like an individual’s sex, age, and relative wealth, or the amount of money at stake—have little predictive power. (72)

In much the same way humans are programmed to learn a language and acquire a set of religious beliefs, they also come into the world with a suite of emotional mechanisms that make up the raw material for what will become a culturally calibrated set of moral intuitions. The specific language and religion we end up with is of course dependent on the social context of our upbringing, just as our specific moral settings will reflect those of other people in the societies we grow up in.

Jonathan Haidt and Tribal Righteousness

In our modern industrial society, we actually have some degree of choice when it comes to our cultural affiliations, and this freedom opens the way for heritable differences between individuals to play a larger role in our moral development. Such differences are nowhere as apparent as in the realm of politics, where nearly all citizens occupy some point on a continuum between conservative and liberal. According to Greene’s fellow moral psychologist Jonathan Haidt, we have precious little control over our moral responses because, in his view, reason only comes into play to justify actions and judgments we’ve already made. In his fascinating 2012 book The Righteous Mind, Haidt insists,

Moral reasoning is part of our lifelong struggle to win friends and influence people. That’s why I say that “intuitions come first, strategic reasoning second.” You’ll misunderstand moral reasoning if you think about it as something people do by themselves in order to figure out the truth. (50)

To explain the moral divide between right and left, Haidt points to the findings of his own research on what he calls Moral Foundations, six dimensions underlying our intuitions about moral and immoral actions. Conservatives tend to endorse judgments based on all six of the foundations, valuing loyalty, authority, and sanctity much more than liberals, who focus more exclusively on care for the disadvantaged, fairness, and freedom from oppression. Since our politics emerge from our moral intuitions and reason merely serves as a sort of PR agent to rationalize judgments after the fact, Haidt enjoins us to be more accepting of rival political groups—after all, you can’t reason with them.

Greene objects both to Haidt’s Moral Foundations theory and to his prescription for a politics of complementarity. The responses to questions representing all the moral dimensions in Haidt’s studies form two clusters on a graph, Greene points out, not six, suggesting that the differences between conservatives and liberals are attributable to some single overarching variable as opposed to several individual tendencies. Furthermore, the specific content of the questions Haidt uses to flesh out the values of his respondents has a critical limitation. Greene writes,

According to Haidt, American social conservatives place greater value on respect for authority, and that’s true in a sense. Social conservatives feel less comfortable slapping their fathers, even as a joke, and so on. But social conservatives do not respect authority in a general way. Rather, they have great respect for authorities recognized by their tribe (from the Christian God to various religious and political leaders to parents). American social conservatives are not especially respectful of Barack Hussein Obama, whose status as a native-born American, and thus a legitimate president, they have persistently challenged. (339)

The same limitation applies to the loyalty and sanctity foundations. Conservatives feel little loyalty toward the atheists and Muslims among their fellow Americans. Nor do they recognize the sanctity of mosques or Hindu holy texts. Greene goes on,

American social conservatives are not best described as people who place special value on authority, sanctity, and loyalty, but rather as tribal loyalists—loyal to their own authorities, their own religion, and themselves. This doesn’t make them evil, but it does make them parochial, tribal. In this they’re akin to the world’s other socially conservative tribes, from the Taliban in Afghanistan to European nationalists. According to Haidt, liberals should be more open to compromise with social conservatives. I disagree. In the short term, compromise may be necessary, but in the long term, our strategy should not be to compromise with tribal moralists, but rather to persuade them to be less tribalistic. (340)

Greene believes such persuasion is possible, even with regard to emotionally and morally charged controversies, because he sees our manual-mode thinking as playing a potentially much greater role than Haidt sees it playing.

Metamorality on the New Pastures

Throughout The Righteous Mind, Haidt argues that the moral philosophers who laid the foundations of modern liberal and academic conceptions of right and wrong gave short shrift to emotions and intuitions—that they gave far too much credit to our capacity for reason. To be fair, Haidt does honor the distinction between descriptive and prescriptive theories of morality, but he nonetheless gives the impression that he considers liberal morality to be somewhat impoverished. Greene sees this attitude as thoroughly wrongheaded. Responding to Haidt’s metaphor comparing his Moral Foundations to taste buds—with the implication that the liberal palate is more limited in the range of flavors it can appreciate—Greene writes,

The great philosophers of the Enlightenment wrote at a time when the world was rapidly shrinking, forcing them to wonder whether their own laws, their own traditions, and their own God(s) were any better than anyone else’s. They wrote at a time when technology (e.g., ships) and consequent economic productivity (e.g., global trade) put wealth and power into the hands of a rising educated class, with incentives to question the traditional authorities of king and church. Finally, at this time, natural science was making the world comprehensible in secular terms, revealing universal natural laws and overturning ancient religious doctrines. Philosophers wondered whether there might also be universal moral laws, ones that, like Newton’s law of gravitation, applied to members of all tribes, whether or not they knew it. Thus, the Enlightenment philosophers were not arbitrarily shedding moral taste buds. They were looking for deeper, universal moral truths, and for good reason. They were looking for moral truths beyond the teachings of any particular religion and beyond the will of any earthly king. They were looking for what I’ve called a metamorality: a pan-tribal, or post-tribal, philosophy to govern life on the new pastures. (338-9)

While Haidt insists we must recognize the centrality of intuitions even in this civilization nominally ruled by reason, Greene points out that it was skepticism of old, seemingly unassailable and intuitive truths that opened up the world and made modern industrial civilization possible in the first place.

As Haidt explains, though, conservative morality serves people well in certain regards. Christian churches, for instance, encourage charity and foster a sense of community few secular institutions can match. But these advantages at the level of parochial groups have to be weighed against the problems tribalism inevitably leads to at higher levels. This is, in fact, precisely the point Greene created his Parable of the New Pastures to make. He writes,

The Tragedy of the Commons is averted by a suite of automatic settings—moral emotions that motivate and stabilize cooperation within limited groups. But the Tragedy of Commonsense Morality arises because of automatic settings, because different tribes have different automatic settings, causing them to see the world through different moral lenses. The Tragedy of the Commons is a tragedy of selfishness, but the Tragedy of Commonsense Morality is a tragedy of moral inflexibility. There is strife on the new pastures not because herders are hopelessly selfish, immoral, or amoral, but because they cannot step outside their respective moral perspectives. How should they think? The answer is now obvious: They should shift into manual mode. (172)

Greene argues that whenever we, as a society, are faced with a moral controversy—as with issues like abortion, capital punishment, and tax policy—our intuitions will not suffice because our intuitions are the very basis of our disagreement.

Watching the conclusion to season four of Breaking Bad, most viewers probably responded to finding out that Walt had poisoned Brock by thinking that he’d become a monster—at least at first. Indeed, the currently dominant academic approach to art criticism involves taking a stance, both moral and political, with regard to a work’s models and messages. Writing for The New Yorker, Emily Nussbaum, for instance, disparages viewers of Breaking Bad for failing to condemn Walt, writing,

When Brock was near death in the I.C.U., I spent hours arguing with friends about who was responsible. To my surprise, some of the most hard-nosed cynics thought it inconceivable that it could be Walt—that might make the show impossible to take, they said. But, of course, it did nothing of the sort. Once the truth came out, and Brock recovered, I read posts insisting that Walt was so discerning, so careful with the dosage, that Brock could never have died. The audience has been trained by cable television to react this way: to hate the nagging wives, the dumb civilians, who might sour the fun of masculine adventure. “Breaking Bad” increases that cognitive dissonance, turning some viewers into not merely fans but enablers. (83)

To arrive at such an assessment, Nussbaum must reduce the show to the impact she assumes it will have on less sophisticated fans’ attitudes and judgments. But the really troubling aspect of this type of criticism is that it encourages scholars and critics to indulge their impulse toward self-righteousness when faced with challenging moral dilemmas; in other words, it encourages them to give voice to their automatic modes precisely when they should be shifting to manual mode. Thus, Nussbaum neglects outright the very details that make Walt’s scenario compelling, completely forgetting that by making Brock sick—and, yes, risking his life—he was able to save Hank, Skyler, and his own two children.

But how should we go about arriving at a resolution to moral dilemmas and political controversies if we agree we can’t always trust our intuitions? Greene believes that, while our automatic modes recognize certain acts as wrong and certain others as a matter of duty to perform, in keeping with deontological ethics, whenever we switch to manual mode, the focus shifts to weighing the relative desirability of each option’s outcomes. In other words, manual mode thinking is consequentialist. And, since we tend to assess outcomes according to their impact on other people, favoring those that improve the quality of their experiences the most, or detract from it the least, Greene argues that whenever we slow down and think through moral dilemmas deliberately we become utilitarians. He writes,

If I’m right, this convergence between what seems like the right moral philosophy (from a certain perspective) and what seems like the right moral psychology (from a certain perspective) is no accident. If I’m right, Bentham and Mill did something fundamentally different from all of their predecessors, both philosophically and psychologically. They transcended the limitations of commonsense morality by turning the problem of morality (almost) entirely over to manual mode. They put aside their inflexible automatic settings and instead asked two very abstract questions. First: What really matters? Second: What is the essence of morality? They concluded that experience is what ultimately matters, and that impartiality is the essence of morality. Combining these two ideas, we get utilitarianism: We should maximize the quality of our experience, giving equal weight to the experience of each person. (173)

If you cite an authority recognized only by your own tribe—say, the Bible—in support of a moral argument, then members of other tribes will either simply discount your position or counter it with pronouncements by their own authorities. If, on the other hand, you argue for a law or a policy by citing evidence that implementing it would mean longer, healthier, happier lives for the citizens it affects, then only those seeking to establish the dominion of their own tribe can discount your position (which of course isn’t to say they can’t offer rival interpretations of your evidence).

If we turn commonsense morality on its head and evaluate the consequences of giving our intuitions priority over utilitarian accounting, we can find countless circumstances in which being overly moral is to everyone’s detriment. Ideas of justice and fairness allow far too much space for selfish and tribal biases, whereas the math behind mutually optimal outcomes based on compromise tends to be harder to fudge. Greene reports, for instance, the findings of a series of experiments conducted by Fieke Harinck and colleagues at the University of Amsterdam in 2000. Negotiators acting as lawyers for either the prosecution or the defense were told to focus either on serving justice or on getting the best outcome for their clients. The negotiations in the first condition almost always ended in deadlock. Greene explains,

Thus, two selfish and rational negotiators who see that their positions are symmetrical will be willing to enlarge the pie, and then split the pie evenly. However, if negotiators are seeking justice, rather than merely looking out for their bottom lines, then other, more ambiguous, considerations come into play, and with them the opportunity for biased fairness. Maybe your clients really deserve lighter penalties. Or maybe the defendants you’re prosecuting really deserve stiffer penalties. There is a range of plausible views about what’s truly fair in these cases, and you can choose among them to suit your interests. By contrast, if it’s just a matter of getting the best deal you can from someone who’s just trying to get the best deal for himself, there’s a lot less wiggle room, and a lot less opportunity for biased fairness to create an impasse. (88)

Framing an argument as an effort to establish who was right and who was wrong is like drawing a line in the sand—it activates tribal attitudes pitting us against them, while treating negotiations more like an economic exchange circumvents these tribal biases.

Challenges to Utilitarianism

But do we really want to suppress our automatic moral reactions in favor of deliberative accountings of the greatest good for the greatest number? Deontologists have posed some effective challenges to utilitarianism in the form of thought-experiments that seem to show efforts to improve the quality of experiences would lead to atrocities. For instance, Greene recounts how in a high school debate, he was confronted by a hypothetical surgeon who could save five sick people by killing one healthy one. Then there’s the so-called Utility Monster, who experiences such happiness when eating humans that it quantitatively outweighs the suffering of those being eaten. More down-to-earth examples feature a scapegoat convicted of a crime to prevent rioting by people who are angry about police ineptitude, and the use of torture to extract information from a prisoner that could prevent a terrorist attack. The most influential challenge to utilitarianism, however, was leveled by the political philosopher John Rawls when he pointed out that it could be used to justify the enslavement of a minority by a majority.

Greene’s responses to these criticisms make up one of the most surprising, important, and fascinating parts of Moral Tribes. First, highly contrived thought-experiments about Utility Monsters and circumstances in which pushing a guy off a bridge is guaranteed to stop a trolley may indeed prove that utilitarianism is not true in any absolute sense. But whether or not such moral absolutes even exist is a contentious issue in its own right. Greene explains,

I am not claiming that utilitarianism is the absolute moral truth. Instead I’m claiming that it’s a good metamorality, a good standard for resolving moral disagreements in the real world. As long as utilitarianism doesn’t endorse things like slavery in the real world, that’s good enough. (275-6)

One source of confusion regarding the slavery issue is the equation of happiness with money; slave owners probably could make profits in excess of the losses sustained by the slaves. But money is often a poor index of happiness. Greene underscores this point by asking us to consider how much someone would have to pay us to sell ourselves into slavery. “In the real world,” he writes, “oppression offers only modest gains in happiness to the oppressors while heaping misery upon the oppressed” (284-5).

Another failing of the thought-experiments said to undermine utilitarianism is the shortsightedness of the supposedly obvious responses. The crimes of the murderous doctor and the scapegoating law officers may indeed produce short-term increases in happiness, but if the secret gets out, healthy and innocent people will live in fear, knowing they can’t trust doctors and law officers. The same logic applies to the objection that utilitarianism would force us to become infinitely charitable, since we can almost always afford to be more generous than we currently are. But how long could we serve as so-called happiness pumps before burning out, becoming miserable, and thus losing the capacity for making anyone else happier? Greene writes,

If what utilitarianism asks of you seems absurd, then it’s not what utilitarianism actually asks of you. Utilitarianism is, once again, an inherently practical philosophy, and there’s nothing more impractical than commanding free people to do things that strike them as absurd and that run counter to their most basic motivations. Thus, in the real world, utilitarianism is demanding, but not overly demanding. It can accommodate our basic human needs and motivations, but it nonetheless calls for substantial reform of our selfish habits. (258)

Greene seems to be endorsing what philosophers call "rule utilitarianism." We can approach every choice by calculating the likely outcomes, but as a society we would be better served deciding on some rules for everyone to adhere to. It just may be possible for a doctor to increase happiness through murder in a particular set of circumstances—but most people would vociferously object to a rule legitimizing the practice.

The concept of human rights may present another challenge to Greene in his championing of consequentialism over deontology. It is our duty, after all, to recognize the rights of every human, and we ourselves have no right to disregard someone else’s rights no matter what benefit we believe might result from doing so. In his book The Better Angels of Our Nature, Steven Pinker, Greene’s colleague at Harvard, attributes much of the steep decline in rates of violent death over the past three centuries to a series of what he calls Rights Revolutions, the first of which began during the Enlightenment. But the problem with arguments that refer to rights, Greene explains, is that controversies arise for the very reason that people don’t agree on which rights we should recognize. He writes,

Thus, appeals to “rights” function as an intellectual free pass, a trump card that renders evidence irrelevant. Whatever you and your fellow tribespeople feel, you can always posit the existence of a right that corresponds to your feelings. If you feel that abortion is wrong, you can talk about a “right to life.” If you feel that outlawing abortion is wrong, you can talk about a “right to choose.” If you’re Iran, you can talk about your “nuclear rights,” and if you’re Israel you can talk about your “right to self-defense.” “Rights” are nothing short of brilliant. They allow us to rationalize our gut feelings without doing any additional work. (302)

The only way to resolve controversies over which rights we should actually recognize and which rights we should prioritize over others, Greene argues, is to apply utilitarian reasoning.

Ideology and Epistemology

In his discussion of the proper use of the language of rights, Greene comes closer than in any other section of Moral Tribes to explicitly articulating what strikes me as the most revolutionary idea that he and his fellow moral psychologists are suggesting—albeit as yet only implicitly. In his advocacy for what he calls “deep pragmatism,” Greene isn’t merely applying evolutionary theories to an old philosophical debate; he’s actually posing a subtly different question. The numerous thought-experiments philosophers use to poke holes in utilitarianism may not have much relevance in the real world—but they do undermine any claim utilitarianism may have on absolute moral truth. Greene’s approach is therefore to eschew any effort to arrive at absolute truths, including truths pertaining to rights. Instead, in much the same way scientists accept that our knowledge of the natural world is mediated by theories, which only approach the truth asymptotically, never capturing it with any finality, Greene intimates that the important task in the realm of moral philosophy isn’t to arrive at a full accounting of moral truths but rather to establish a process for resolving moral and political dilemmas.

What’s needed, in other words, isn’t a rock-solid moral ideology but a workable moral epistemology. And, just as empiricism serves as the foundation of the epistemology of science, Greene makes a convincing case that we could use utilitarianism as the basis of an epistemology of morality. Pursuing the analogy between scientific and moral epistemologies even further, we can compare theories, which stand or fall according to their empirical support, to individual human rights, which we afford and affirm according to their impact on the collective happiness of every individual in the society. Greene writes,

If we are truly interested in persuading our opponents with reason, then we should eschew the language of rights. This is, once again, because we have no non-question-begging (and nonutilitarian) way of figuring out which rights really exist and which rights take precedence over others. But when it’s not worth arguing—either because the question has been settled or because our opponents can’t be reasoned with—then it’s time to start rallying the troops. It’s time to affirm our moral commitments, not with wonky estimates of probabilities but with words that stir our souls. (308-9)

Rights may be the closest thing we have to moral truths, just as theories serve as our stand-ins for truths about the natural world, but even more important than rights or theories are the processes we rely on to establish and revise them.

A New Theory of Narrative

As if a philosophical revolution weren’t enough, moral psychology is also putting in place what could be the foundation of a new understanding of the role of narratives in human lives. At the heart of every story is a conflict between competing moral ideals. In commercial fiction, there tends to be a character representing each side of the conflict, and audiences can be counted on to favor one side over the other—the good, altruistic guys over the bad, selfish guys. In more literary fiction, on the other hand, individual characters are faced with dilemmas pitting various modes of moral thinking against each other. In season one of Breaking Bad, for instance, Walter White famously writes a list on a notepad of the pros and cons of murdering the drug dealer restrained in Jesse’s basement. Everyone, including Walt, feels that killing the man is wrong, but if they let him go, Walt and his family will be at risk of retaliation. This dilemma is in fact quite similar to ones he faces in each of the following seasons, right up until he has to decide whether or not to poison Brock. Trying to work out what Walt should do, and anxiously anticipating what he will do, are mental exercises few can help engaging in as they watch the show.

The current reigning conception of narrative in academia explains the appeal of stories by suggesting it derives from their tendency to fulfill conscious and unconscious desires, most troublesomely our desires to have our prejudices reinforced. We like to see men behaving in ways stereotypically male, women stereotypically female, minorities stereotypically black or Hispanic, and so on. Cultural products like works of art, and even scientific findings, are embraced, the reasoning goes, because they cement the hegemony of various dominant categories of people within the society. This tradition in arts scholarship and criticism can in large part be traced back to psychoanalysis, but it has developed over the last century to incorporate both the predominant view of language in the humanities and the cultural determinism espoused by many scholars in the service of various forms of identity politics.

The postmodern ideology that emerged from the convergence of these schools is marked by a suspicion that science is often little more than a veiled effort at buttressing the political status quo, and its preeminent thinkers deliberately set themselves apart from the humanist and Enlightenment traditions that held sway in academia until the middle of the last century by writing in byzantine, incoherent prose. Even though there could be no rational way either to support or challenge postmodern ideas, scholars still take them as cause for leveling accusations against both scientists and storytellers of using their work to further reactionary agendas.

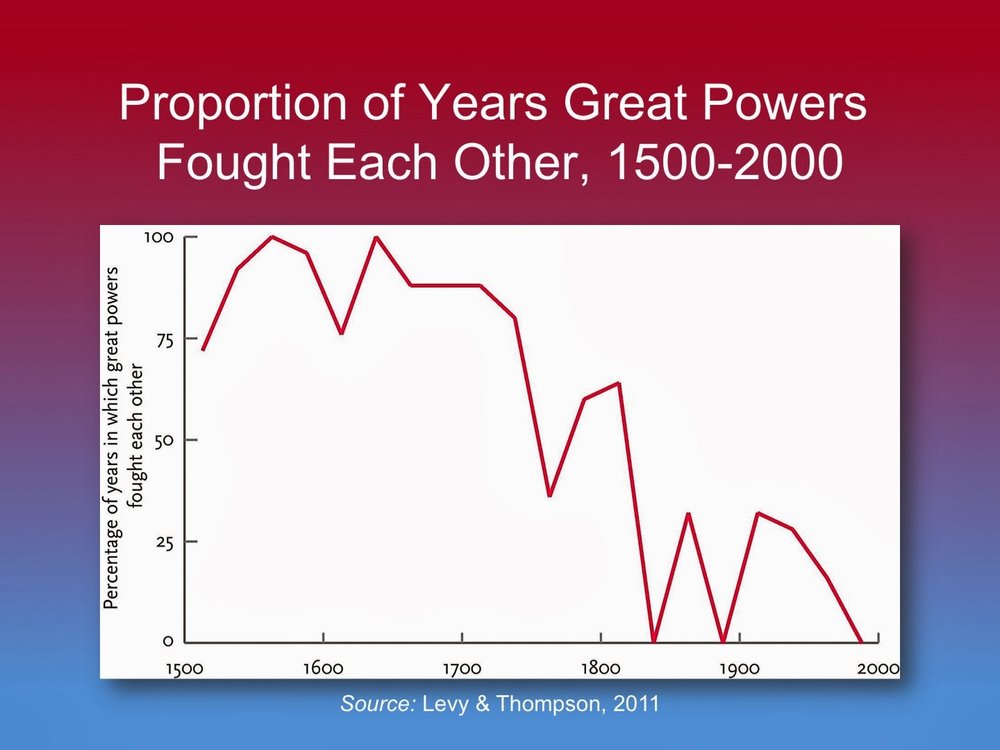

For anyone who recognizes the unparalleled power of science both to advance our understanding of the natural world and to improve the conditions of human lives, postmodernism stands out as a catastrophic wrong turn, not just in academic history but in the moral evolution of our civilization. The equation of narrative with fantasy is a bizarre fantasy in its own right. Attempting to explain the appeal of a show like Breaking Bad by suggesting that viewers have an unconscious urge to be diagnosed with cancer and to subsequently become drug manufacturers is symptomatic of intractable institutional delusion. And, as Pinker recounts in Better Angels, literature, and novels in particular, were likely instrumental in bringing about the shift in consciousness toward greater compassion for greater numbers of people that resulted in the unprecedented decline in violence beginning in the second half of the nineteenth century.

Yet, when it comes to arts scholarship, postmodernism is just about the only game in town. Granted, the writing in this tradition has progressed a great deal toward greater clarity, but the focus on identity politics has intensified to the point of hysteria: you’d be hard-pressed to find a major literary figure who hasn’t been accused of misogyny at one point or another, and any scientist who dares study something like gender differences can count on having her motives questioned and her work lampooned by well-intentioned, well-indoctrinated squads of well-poisoning liberal wags.

When Emily Nussbaum complains about viewers of Breaking Bad being lulled by the “masculine adventure” and the digs against “nagging wives” into becoming enablers of Walt’s bad behavior, she’s doing exactly what so many of us were taught to do in academic courses on literary and film criticism, applying a postmodern feminist ideology to the show—and completely missing the point. As the series opens, Walt is deliberately portrayed as downtrodden and frustrated, and Skyler’s bullying is an important part of that dynamic. But the pleasure of watching the show doesn’t come so much from seeing Walt get out from under Skyler’s thumb—he never really does—as it does from anticipating and fretting over how far Walt will go in his criminality, goaded on by all that pent-up frustration. Walt shows a similar concern for himself, worrying over what effect his exploits will have on who he is and how he will be remembered. We see this in season three when he becomes obsessed by the “contamination” of his lab—which turns out to be no more than a house fly—and at several other points as well. Viewers are not concerned with Walt because he serves as an avatar acting out their fantasies (or else the show would have a lot more nude scenes with the principal of the school he teaches in). They’re concerned because, at least at the beginning of the show, he seems to be a good person and they can sympathize with his tribulations.

The much more solidly grounded view of narrative inspired by moral psychology suggests that common themes in fiction are not reflections or reinforcements of some dominant culture, but rather emerge from aspects of our universal human psychology. Our feelings about characters, according to this view, aren’t determined by how well they coincide with our abstract prejudices; on the contrary, we favor the types of people in fiction we would favor in real life. Indeed, if the story is any good, we will have to remind ourselves that the people whose lives we’re tracking really are fictional. Greene doesn’t explore the intersection between moral psychology and narrative in Moral Tribes, but he does give a nod to what we can only hope will be a burgeoning field when he writes,

Nowhere is our concern for how others treat others more apparent than in our intense engagement with fiction. Were we purely selfish, we wouldn’t pay good money to hear a made-up story about a ragtag group of orphans who use their street smarts and quirky talents to outfox a criminal gang. We find stories about imaginary heroes and villains engrossing because they engage our social emotions, the ones that guide our reactions to real-life cooperators and rogues. We are not disinterested parties. (59)

Many people ask why we care so much about people who aren’t even real, but we only ever reflect on the fact that what we’re reading or viewing is a simulation when we’re not sufficiently engrossed by it.

Was Nussbaum completely wrong to insist that Walt went beyond the pale when he poisoned Brock? She certainly showed the type of myopia Greene attributes to the automatic mode by forgetting Walt saved at least four lives by endangering the one. But most viewers probably had a similar reaction. The trouble wasn’t that she was appalled; it was that her postmodernism encouraged her to unquestioningly embrace and give voice to her initial feelings. Greene writes,